Our team worked in collaboration with Lighter to review their custom ZK circuits for a verifiable orderbook matching. The code was found to be solid and well-organized. It was a pleasure working with Lighter’s engineers, who were highly cooperative.

You can read the full report here.

The rest of this post includes a copy/paste of the overview section of the report.

Overview of the Lighter circuit

Lighter is an exchange implemented as a Layer 2 (L2), as its state transitions are verified and finalized on a Layer 1 (L1). The L1 ensures that each state transition is valid by verifying zero-knowledge proofs, making Lighter a ZK rollup. Zero-knowledge proofs not only prove that a series of state transitions were executed correctly, but also expose compressed summaries of sub state transitions to the L1.

Exposing sub state transitions to the L1 is important for two reasons: not only some of the transitions require additional execution of logic and enforcement of policy on the L1, but issues arising in the operation of the service must not lead to a complete stop of the system. Thanks to data being published on the L1, in case of emergency any L1 user is able to recompute the latest state of the L2 by replaying all of the exposed diffs.

There are two main circuits supporting the Lighter L2 application, comprising what we call the zkLighter circuits:

- The main operation circuit, which is supposed to be the main circuit, for as long as the system operates normally.

- The exit hatch circuit, so-called “desert” circuit in the code, which takes over in case of an emergency and allows users to exit the system. See the end of this overview for more information.

In the rest of the overview, we will abuse the term zkLighter circuits to refer to the first circuit.

The state of the application

In order to provide updates on a persistent storage, the storage of Lighter is constructed so that it can be authenticated by Merkle trees. An update can take a Merkle tree root which authenticates the state of the application fully, and produce an updated Merkle tree root which can be saved as an authenticated snapshot of the new state.

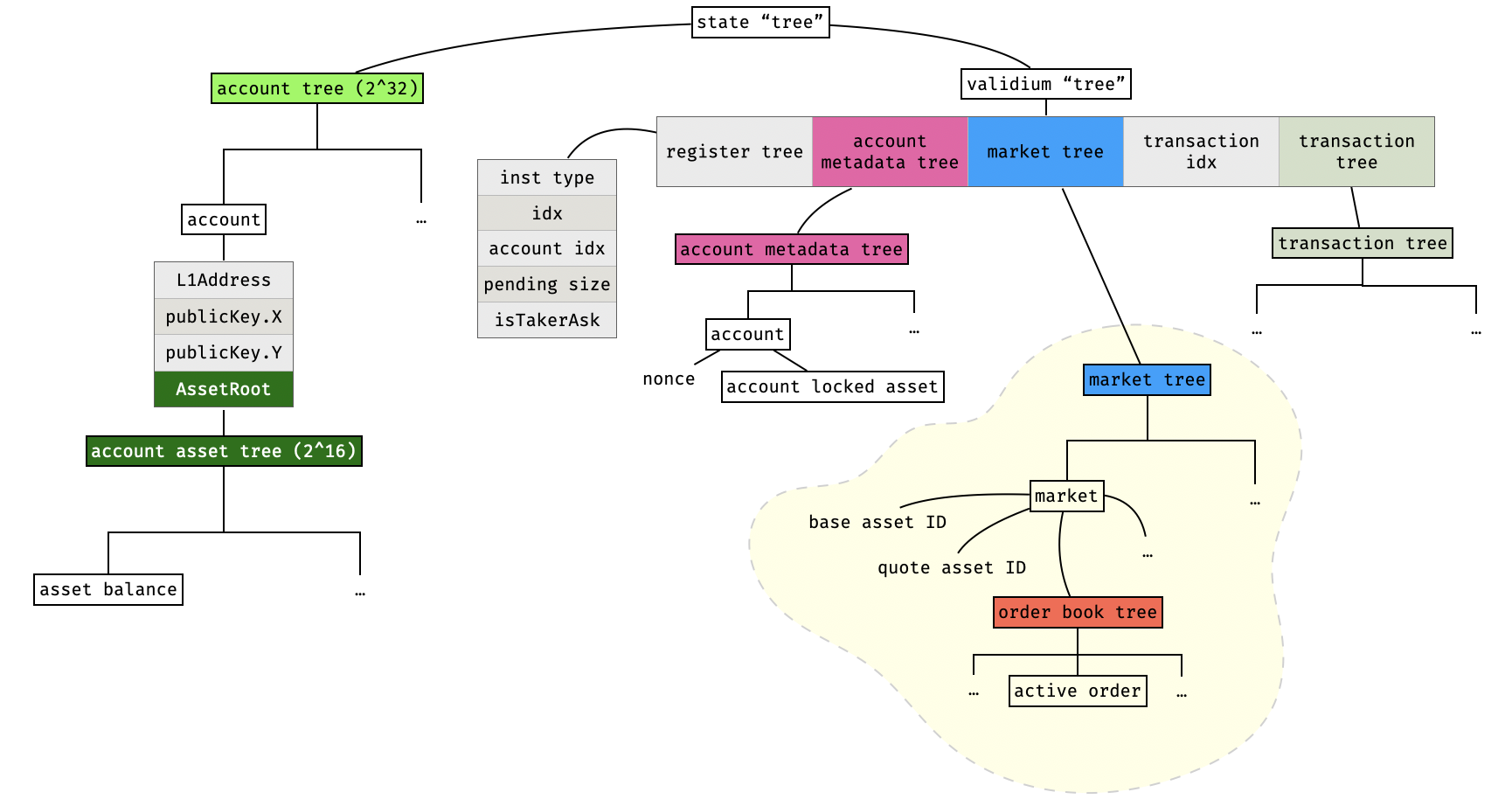

We can summarize the whole state of Lighter as a hypertree (tree of trees) as follows:

There are 6 real Merkle trees in the above diagram, which are assigned to a different color. The other “trees” are just hashes of their inputs, and do not have internal nodes.

Notice that an account’s state is split in two different trees: the one on the left contains all account information except for the amount of assets locked in orders that have been inserted in order books. This extra information is contained in a “account metadata” tree for optimization purposes.

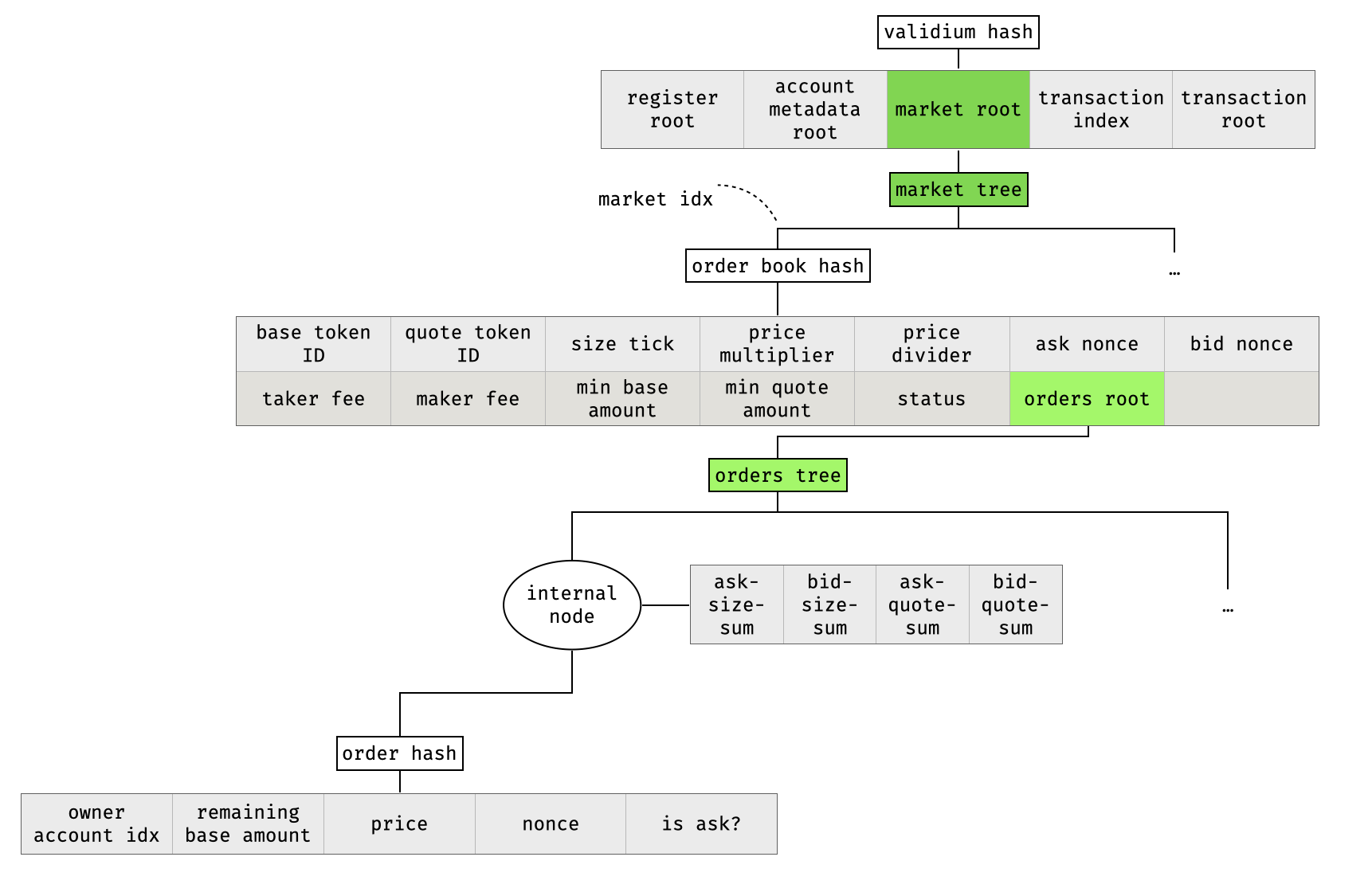

The order book tree of a market is quite special, as it is quite a large tree (of height 72) and contains extra information in all of its internal nodes. So it is worth taking a look at it in isolation:

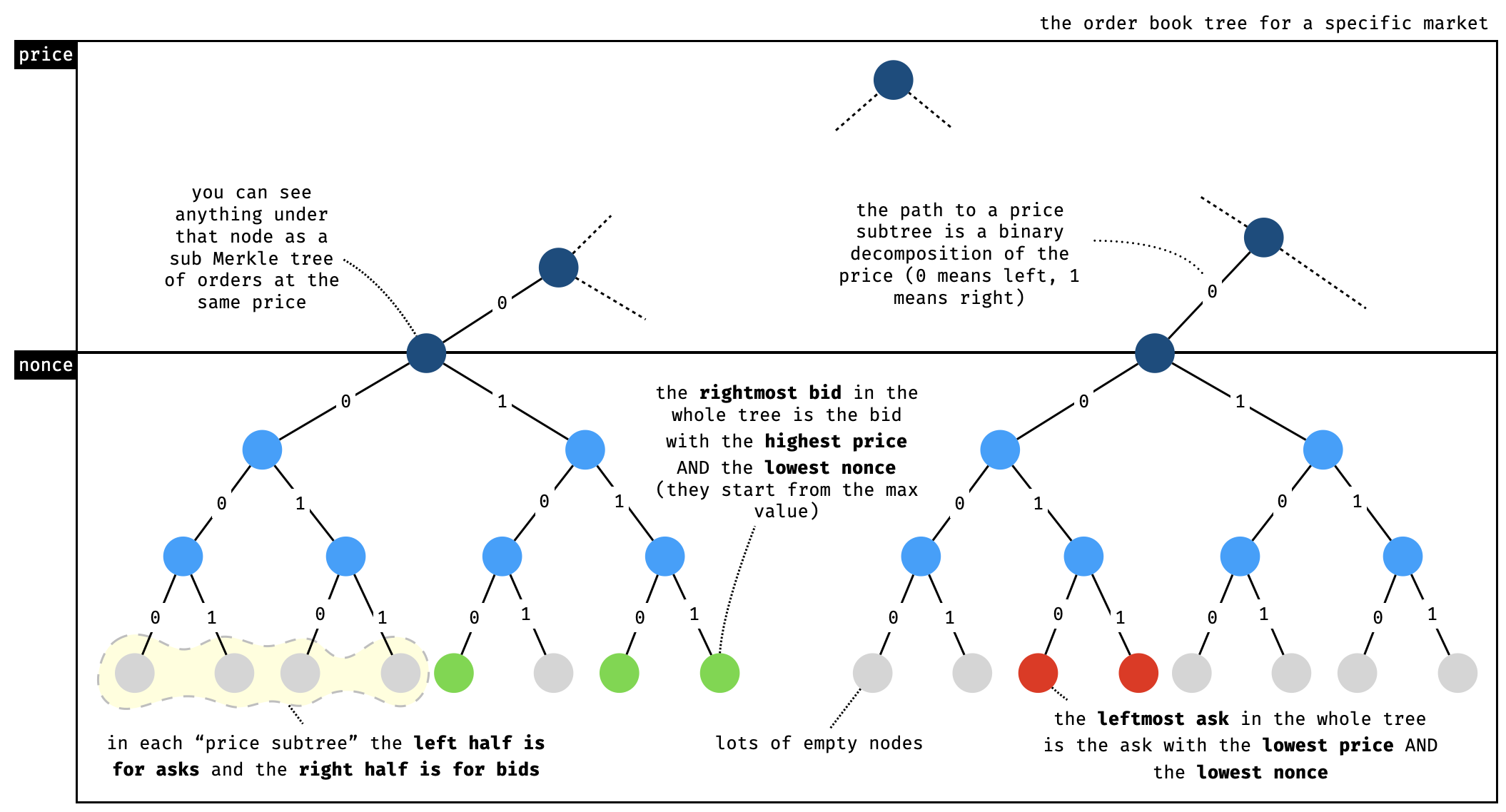

Orders in the order book are stored under a special path, calculated based on the price and a nonce. As the price is the first part of the path, the leaves are ordered from lowest to highest price, and will naturally paint the tree with bid orders on the left side and ask orders on the right side (and a visible spread in the middle).

There should always be a gap somewhere in the tree, with bids on the left side and asks on the right side of the gap, making up the spread.

Note that while asks start being indexed at zero, bids start from the maximum nonce value. This design choice leads to the previous observation on higher priority orders, but also to a special case when asks and bids are crossing in the same “price subtree”: if an ask is ever inserted in the same subtree as other bids, then these other bids can be seen as crossing orders to the matched part if the incoming order and will be found on the right side of the ask (and vice versa for a bid inserted in a price subtree with several asks). This observation will be useful later when we discuss the matching engine.

zkLighter blocks

The main object handled by the zkLighter circuit is a block. As in your typical blockchain, a block contains a list of transactions and produces a state transition by applying these transactions to a known previous state (as attested to by the previous block), and attests to the updated state.

Transactions are “executed” one by one, in a way that ensures no cheating in the matching algorithm. That is, that trades are optimal, as long as the ordering of the transactions is done in a fair manner.

Note that some transactions are not real user transactions, but inserted “fake” transactions that help finalize the execution of some types of user transactions (specifically, transactions that might require several “cycles” to complete). We explain this in more detail in later sections.

The logic of a block goes like this:

- Ensure that the first transaction builds on the block’s old state root.

- Verify each transaction, ensuring on the way that each transaction builds on the previous transaction’s output state.

- Expose as public input the block number, a timestamp, the root state before and after the state transition (as the L1 will need to verify that the state transition built on the latest recorded state), other useful roots of Merkle trees (validium and transaction trees), and finally a vector of public data which describes some of the transactions that made up the state transition.

We summarize the same logic in pseudo-code as follows:

def verify_block(public, private):

# init

pub_data = [0] * BLOCK_TXS * 24

pos = 0

# check first transaction

assert(private.old_state_root == private.txs[0].state_root_before)

pending_pub_data, tx_pub_data_size, roots = verify_transaction(private.txs[0], private.created_at, roots)

copy(pub_data[pos:], pending_pub_data) # via multiplexer

pos += tx_pub_data_size

# check the rest of transactions

for i in range(1, BLOCK_TXS):

assert(private.txs[i-1].state_root_after == private.txs[i].state_root_before)

pending_pub_data, tx_pub_data_size, roots = verify_transaction(private.txs[i], private.created_at, roots)

copy(pub_data[pos:], pending_pub_data) # via multiplexer

pos += tx_pub_data_size

# compute new state root (unnecessary as compute)

new_state_root = private.txs[i-1].state_root_after

[new_account_root, new_validium_root, new_transaction_root] = roots

# create commitment as public output

tx_pub_data_hash = sha256(pub_data)

commitment_data = [ # 176 Bytes in total:

private.block_number, # 8

private.created_at, # 8

private.old_state_root, # 32

new_state_root, # 32

new_validium_root, # 32

new_transaction_root, # 32

tx_pub_data_hash, # 32

]

commitment = sha256(commitment_data)

assert(commitment == public.block_commitment)

Types of transactions

Most of the logic in zkLighter comes from the execution of a cycle, where a cycle contains the logic that can execute a transaction. As previously mentioned, a transaction can be a user transaction or a “fake” transaction that helps drive the execution of a user transaction in multiple cycles.

One way to categorize transactions is to split them in different categories. Some of the transactions are L2 transactions as they are authenticated by the public key contained in the L2 account state:

- Transfer. This is a direct transfer of assets between accounts in the L2.

- Withdraw. This is a withdraw transaction from the L2 to the L1.

- CreateOrder. This creates an order in some market.

- CancelOrder. This cancels an order in some market.

- ChangePubKey. This allows a user to update their account’s public key. Note that this transaction is not “purely” an L2 transaction as it is authenticated mostly on the L1: the L1 contract’s logic is in charge of ensuring that the account’s associated L1 address is the

msg.sender.

Some of the other transactions are deemed L1 transactions and are submitted to the L1 contract. We presume that when a block exposes state transitions coming from such transactions, the L1 logic will ensure that they were submitted on the L1 too. These transaction types are:

- CreateOrderBook. An admin on the L1 should be able to create a new market to trade a pair of assets.

- UpdateOrderBook. An admin on the L1 should be able to update information for an existing market.

- Deposit. This allows an L1 user to deposit some specific asset in any account on the L2.

- FullExit. This allows a user to empty their account for a specific asset. This means that not only the account of the user will be drained, but all orders for that asset (in any market where that asset could be a pair) will be canceled. Note that this transaction exists to ensure that users can still exit the system even when the system is censoring them (so unlike withdraw transactions, these are posted and authenticated on the L1).

Finally, there are two types of “fake” transactions that are used to finalize the execution of some of the previous user transactions:

- ClaimOrder. This transaction is used to continue processing a CreateOrder transaction, as a taker order might remove and update several maker orders in a market’s order book.

- ExitOrder. This transaction is used to continue processing a FullExit transaction, as the prover requires as many cycles as there are orders associated with that exit transaction.

To help keep track of the status of the execution of a user transaction across cycles, an additional variable named “register” is used. Since there is no theoretical limit to the number of fake transactions that need to be inserted as part of the execution of a single user transaction, the register is also a persistent variable across blocks (as can be seen in the first state diagram).

A note on unrolled logic

The logic of zkLighter is encoded in a zero-knowledge circuit, due to this loops have to be unrolled and real branching cannot occur. This adds quite a lot of complexity to the code which has to ensure that every branch can always be computed and produce dummy results. We mention some of the techniques in this section.

Most of the techniques in this section can be reduced to using multiplexers. For example, booleans are often computed and used to ensure that only one path is taken at a time (and one transaction is set at a time):

// compute tx type

isEmptyTx := types.IsEqual(api, tx.TxType, types.TxTypeEmpty)

isChangePubKey := types.IsEqual(api, tx.TxType, types.TxTypeChangePubKey)

// TRUNCATED...

isSupportedTx := api.Add(

isEmptyTx,

isChangePubKey,

// TRUNCATED...

)

api.AssertIsEqual(isSupportedTx, 1)

// verify nonce, expired at and signature

isLayer2Tx := api.Add(

isChangePubKey,

isTransferTx,

// TRUNCATED...

)

Branches that are not taken often take such flags to avoid asserting on dummy values. To do that, asserting functions are in turn augmented to assert conditionally. For example:

func AssertIsVariableEqual(api API, isEnabled, i1, i2 Variable) {

zero := 0

i1 = api.Select(isEnabled, i1, zero)

i2 = api.Select(isEnabled, i2, zero)

api.AssertIsEqual(i1, i2)

}

In addition, seeking and writing to arrays must be done in a way that cover all possible cases:

pos := 0

inc := 1

// use incremental arrays to avoid high constraint cmp operation

// pos is used to indicate the current position to write in pubData

// inc is used to indicate whether current position needs to be incremented

// Let's say pubDataSize is 4, the values in iteration will be:

// IsEqual : 0 0 0 0 1 0 0 0 0

// Inc : 1 1 1 1 0 0 0 0 0

// Pos : 1 2 3 4 4 4 4 4 4

for i := 0; i < types.PubDataDWordSizePerTx; i++ {

// for the first tx, no need to iterate for position.

pubData[i] = pendingPubData[i]

inc = api.Sub(inc, types.IsEqual(api, i, txPubDataSize))

pos = api.Add(pos, inc)

}

Verifying transactions

A transaction in zkLighter contains all possible transactions for the reason stated above. In addition, it contains enough data to verify a signature (in case of the transaction being a user transaction), as well as witness data provided by the sequencer for the prover to apply the transaction on the state. The witness data mostly consist of the data stored in the latest state that will be mutated by the transaction, as well as Merkle proofs to authenticate the integrity of the data.

type TxConstraints struct {

// tx type

TxType Variable

// different transactions

ChangePubKeyTxInfo ChangePubKeyTxConstraints

DepositTxInfo DepositTxConstraints

TransferTxInfo TransferTxConstraints

CreateOrderBookTxInfo CreateOrderBookTxConstraints

UpdateOrderBookTxInfo UpdateOrderBookTxConstraints

CreateOrderTxInfo CreateOrderTxConstraints

CancelOrderTxInfo CancelOrderTxConstraints

ClaimOrderTxInfo ClaimOrderTxConstraints

ExitOrderTxInfo ExitOrderTxConstraints

WithdrawTxInfo WithdrawTxConstraints

FullExitTxInfo FullExitTxConstraints

// nonce

Nonce Variable

// expired at

ExpiredAt Variable

// signature

Signature SignatureConstraints

// everything else after this point is storage data that is provided by the sequencer and that can be inserted in the circuit to help execution

}

We can summarize the logic of the transaction circuit as follows:

- Figure out what transaction we are looking at.

- Hash the transaction data and verify a signature over the transaction in case of an L2 transaction.

- Compute public data from the transaction (which we will expose publicly eventually).

- Verify the fields of the transaction (the checks are different depending on the transaction).

- At this point we also verify that, if the register is non-empty, the correct transaction is associated with it.

- Calculate “deltas” – changes based on the transaction type and witnessed leaves of the state.

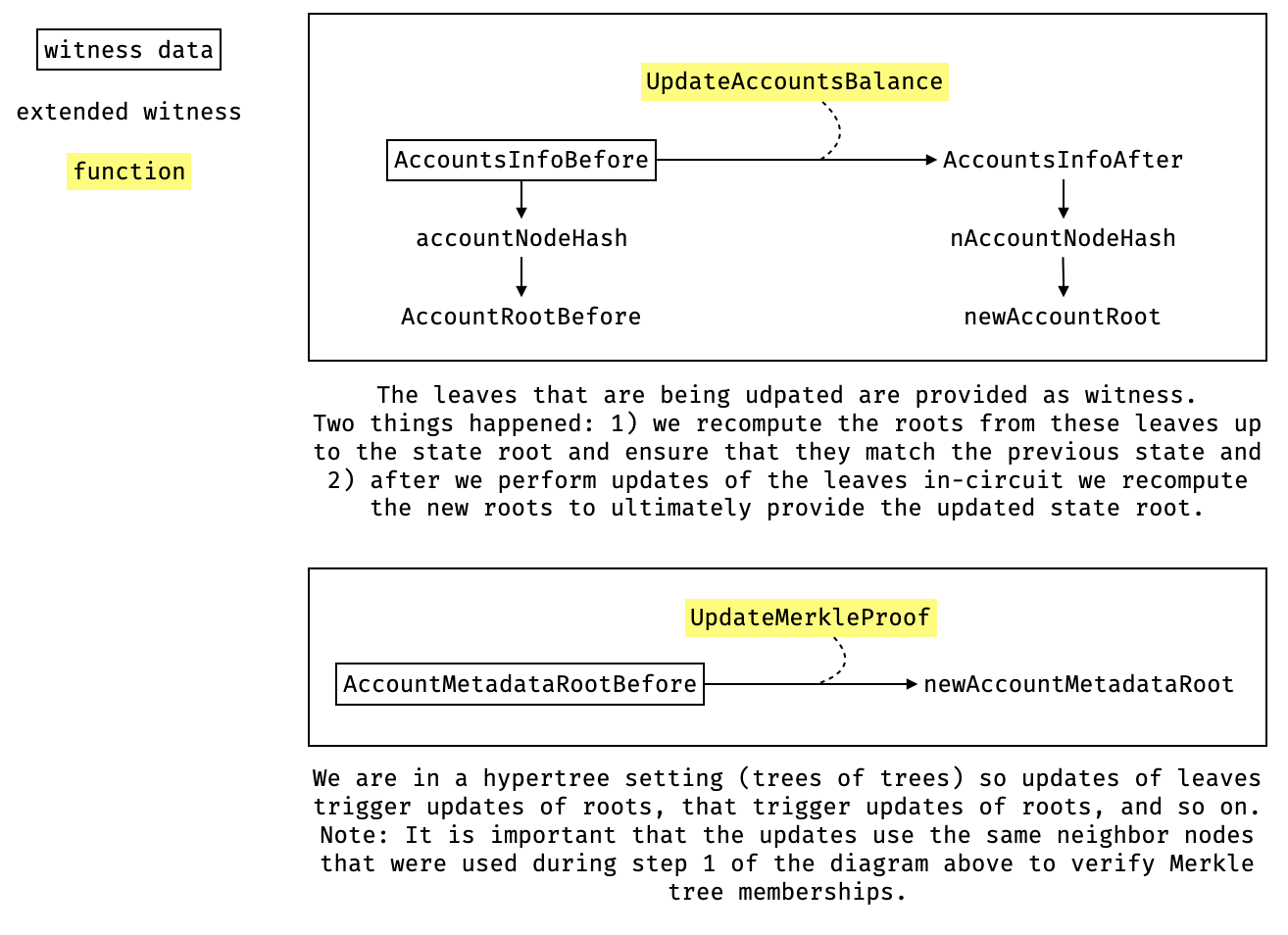

- Verify that the current witnessed leaves are in the current state. This means verifying a number of Merkle membership proofs. Up until the roots that are exposed in the public input.

- Update the now-verified leaves with the previously computed “deltas”.

- Recompute the Merkle roots (using the same proofs used to verify Merkle memberships), up to the roots that are exposed in the public input.

- Ensure that every transaction is set to zero except the transaction we’re looking at.

- Return the public data, its size (as the vector is padded with zeros), and the updated roots.

The three-step concept of a witnessed leaf being authenticated in a Merkle tree, being updated, and new roots being computed, a huge part of the logic in the protocol described above. We give an example of that three-step process in the diagram below.

Most of the complexity within this, itself a complex part of the codebase, comes from updating the state and more specifically, the matching engine responsible for performing user trades. We go over this in more detail in the next section.

Order book transactions

As stated previously, there are two types of order transactions that can be submitted by users: create order and cancel order. As its name indicates, a cancel order is merely responsible for removing a leaf in an order book, and as such this is the last time we’ll mention it.

There are four different types of create order transactions, and they all combine different techniques in order to convince users that there was no cheating on the part of the matchmaker, and that each processed trade was optimal (as long as transactions are ordered fairly). Before reviewing them we introduce a few terms (that are not part of Lighter’s documentation):

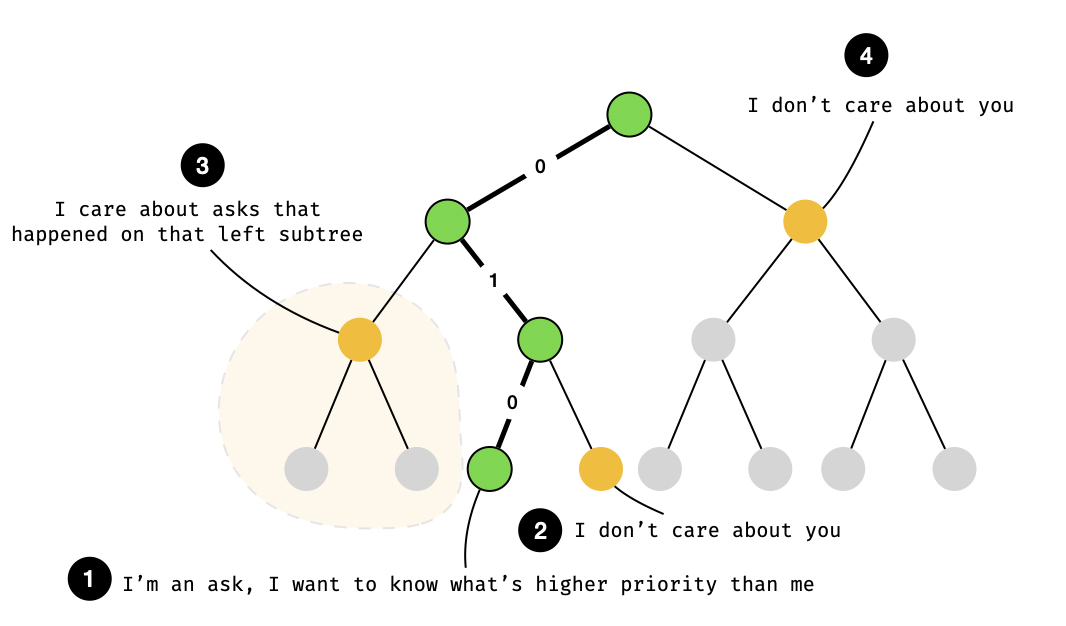

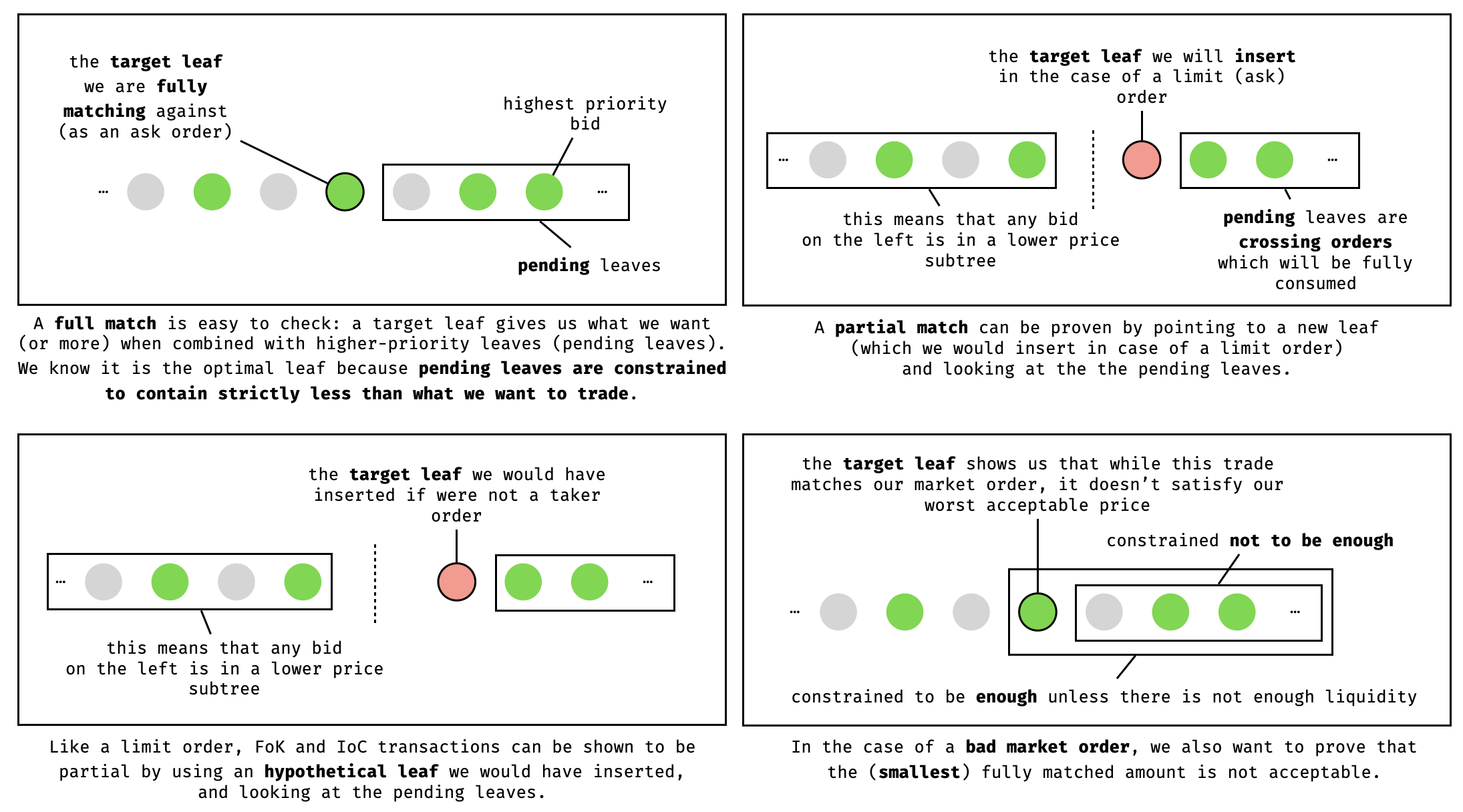

- Target leaf: this is the leaf, that either represents the worst (from the taker’s point of view) and latest maker order we need to match — in the case of a full match, or that we might need to insert in the order book — in the case of a partial match. If we end up inserting, the target leaf represents the same type of order as the user’s order transaction.

- Pending leaves: these are all the leaves that an order could fully consume to partially or fully complete a trade, and which are either higher-priority than the target leaf in case they are of the same type, or crossing orders in case they are of different types (due to the way bids and asks are arranged in a price subtree).

Note that pending leaves are always constrained to be less than the size of the order transaction. This is an important property as it is used to prove optimality of the matching for different order types. Intuitively, this property allows us to position ourselves at the right spot in the order tree: right in-between fully matching and not fully matching.

We now review the different types of order transactions.

Limit order. This order sets a maximum (resp. minimum) price for a bid (resp. ask). If it cannot be fully matched with existing maker orders in a market, then the remaining amount to match will be inserted in the order book (after being partially matched against as many marker orders as possible).

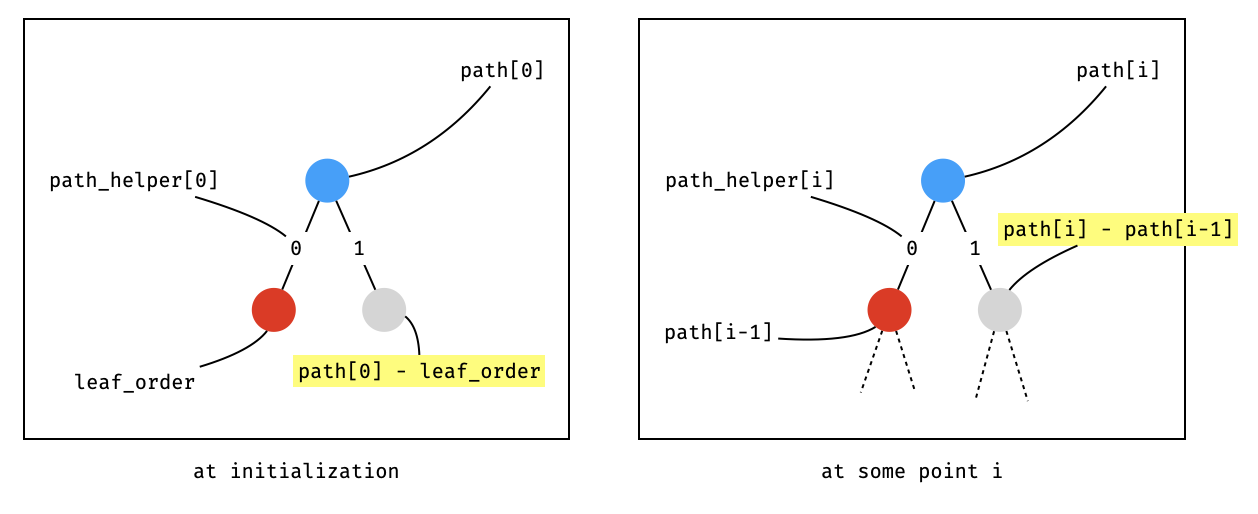

Thanks to the special composition of the internal node of the order book tree (which contains the aggregated bids and quotes of all children nodes), one can use a target leaf and climb up the tree to compute the sum of all pending orders. In the case of a full match, the target leaf and the pending orders should represent the full amount to trade with (and is easy to verify). In the case of a partial match, the crossing orders in between the leaf to insert and the rest of the tree can be verified to be less than the matching amount (and the price will be right because we are in the right price subtree).

We illustrate how one can climb the tree to compute the sum of all pending orders in the diagram below:

Interestingly, one can guess the aggregated sums contained in a neighboring subtree without witnessing a preimage of the neighboring node (avoiding having to compute a hash): it can be derived from the parent and the current child, as illustrated in the next diagram:

In both situations, the register is set to ExecutePendingTrade and given the remaining amount as “pending size” to claim from the order tree. Enough claim transactions are inserted by the sequencer to reduce that pending size to 0, where each claim transaction consumes the current highest priority order (we can check via the internal nodes that no other higher-priority transactions exist). Once the pending size is decreased to 0, the register can be reset to accept the next user transaction.

Market order. This order is similar to a limit order, but does not end up in the order book. As such it is a taker order (as it does not “make” the market).

For full matching, which is what is expected of a market order, the same kind of target leaf as a full matching with a limit order is expected. If everything goes right the rest of the protocol follows the same logic as a successful limit order.

Market orders have two valid scenarios where they can fail. In such situations, the prover must still be able to process them and prove that there was no possible way to process the orders, as some orders are priority orders which MUST be fulfiled otherwise the protocol’s exit hatch is triggered (see later).

The first edge case is that there is not enough liquidity in the order book to match the trade, this is easy to prove as the total liquidity is contained in the root node of the order tree.

The second edge case is when the trade is matched but at a non-desirable price: each market order is explicitly associated with a slippage value which dictates the worst price at which to accept a trade. If such a case is met, the prover provides a similar target leaf as in the limit order case. As the sum of pending leaves is always constrained to be lower than the order’s size, the circuit can check that the market order is right in-between the target leaf and the pending leaves.

Fill-or-kill (FoK) order. This is a taker order, that is a no-op if it cannot be fully matched against a set of existing maker orders.

FoK orders follow the same strategy as limit orders, except that the process of claim is not started unless there’s a full match.

Immediate-or-Cancel order. This is another taker order that can match against several maker orders and does not end up in the order book if only partially matched.

They follow the same strategy as the previous FoK orders, except that the process of claim is still started if there isn’t a full match.

We recapitulate some of the detail in the following diagram:

Exit Hatch Mode

The Lighter smart contract supposedly (as we only looked at the circuit-side of things) has two modes of operation: a normal mode of operation, and a fallback mode (called “desert” in the code) that becomes the persistent default mode in event something bad happens. “Something bad” is defined in the paper to include, for example, not being able to process priority transactions in a timely manner.

Once the exit hatch is activated, the only proofs accepted change to a so-called “desert” proof which only allows users to exit the application and withdraw any funds locked in the application. These proofs can be generated by users as long as they know the state, which can be reconstructed (as previously discussed) by using the data exposed publicly by all state transitions that the smart contract processed.

The proof is on the execution of a simple circuit:

- Check that the state tree correctly authenticates an asset in an account (by doing a bunch of merkle proof verifications).

- As public inputs, expose:

- The account index: so we can save that event and enforce a single exit per account.

- The account L1 address: so we know how to route the unlocked funds.

- The asset ID and asset balance: to know what kind of token and how much to unlock from the lighter app.

- The state root: so we can verify that the execution was done against the correct state saved in the smart contract.

For completion, in pseudo-code:

def verify_desert(public, private):

# 1. verify state root based on account and validium root

new_state_root = mimc(private.account, private.validium_root)

assert(private.state_root == new_state_root)

# construct public data

pub_data = [

to_bytes(private.tx.account_index, 32/8),

to_bytes(private.tx.asset_id, 16/8),

to_bytes(private.tx.asset_amount, 128/8),

to_bytes(private.tx.L1_address, 160/8),

]

# verify provided exit tx

assert(private.tx.L1_address == private.account_info.L1_address)

assert(private.tx.account_idx == private.account_info.account_idx)

assert(private.tx.asset_id == private.account_info.assets_info.asset_id)

assert(private.tx.asset_amount == private.account_info.assets_info.asset_amount)

# sanitize assets info

assert(private.account_info.assets_info.asset_id < 2^16)

# verify account asset root

asset_merkle_helper = to_binary(private.account_info.assets_info.asset_id, 16)

asset_node_hash = mimc(private.account_info.assets_info.balance)

Merkle.verify(private.account_info.asset_root, asset_node_hash, private.merkle_proofs_account_asset, asset_merkle_helper)

# sanitize account info

assert(private.account_info.account_index < 2^32)

# verify account root

account_index_merkle_helper = to_binary(private.account_info.account_index, 32)

account_node_hash = mimc(private.account_info)

Merkle.verify(private.account_root, account_node_hash, private.merkle_proofs_account, account_index_merkle_helper)

# compress public input into public output

pending_commitment_data = append(private.state_root, pub_data)

commitment = sha256(pending_commitment_data)

assert(commitment == public.commitment)